Introduction

The inherently dynamic nature of human communication entails adaptability in both spoken and written discourse, as individuals navigate diverse contexts and audiences. This linguistic malleability, commonly referred to as style, encompasses many textual attributes, including but not limited to formality, politeness, diction, and emotional tenor. Text style transfer (TST), a long-standing task in natural language processing (NLP), seeks to change specific stylistic attributes of a text while preserving its underlying meaning. This complex task holds significant potential for downstream applications such as machine translation, where it can facilitate the interpretation of stylistically complex texts; text simplification, which aims to render complex content accessible to a broader audience; and formality transfer, which adapts text to suit diverse social contexts. The table below illustrates several representative examples of such transformations.

| Original text | Stylized text |
|---|---|
| (Rude) What the hell is wrong with your attitude? | (Polite) Perhaps the question is more about your attitude. |
| (Positive) Apple’s new chip is worth its price. | (Negative) Apple’s new chip is too expensive to afford. |

Methodology

In this project, I aimed to leverage large pre-trained transformer language models for the TST task. I first experimented with a naive seq2seq model based on T5 [@raffel_exploring_2020] with a language model head, and with a generator-discriminator network that enables adversarial training [implementing @dai_style_2019]. I ultimately developed and trained a T5-based conditional generation model with a language model head and a style extractor encoder, which in my experiments performed noticeably better than the GAN model and appears more flexible than the naive seq2seq fine-tuning approach. Proposed by Riley et al., this more recent approach features few-shot tunable targeted restyling and style extraction [@riley_textsettr_2021].

Model Architecture

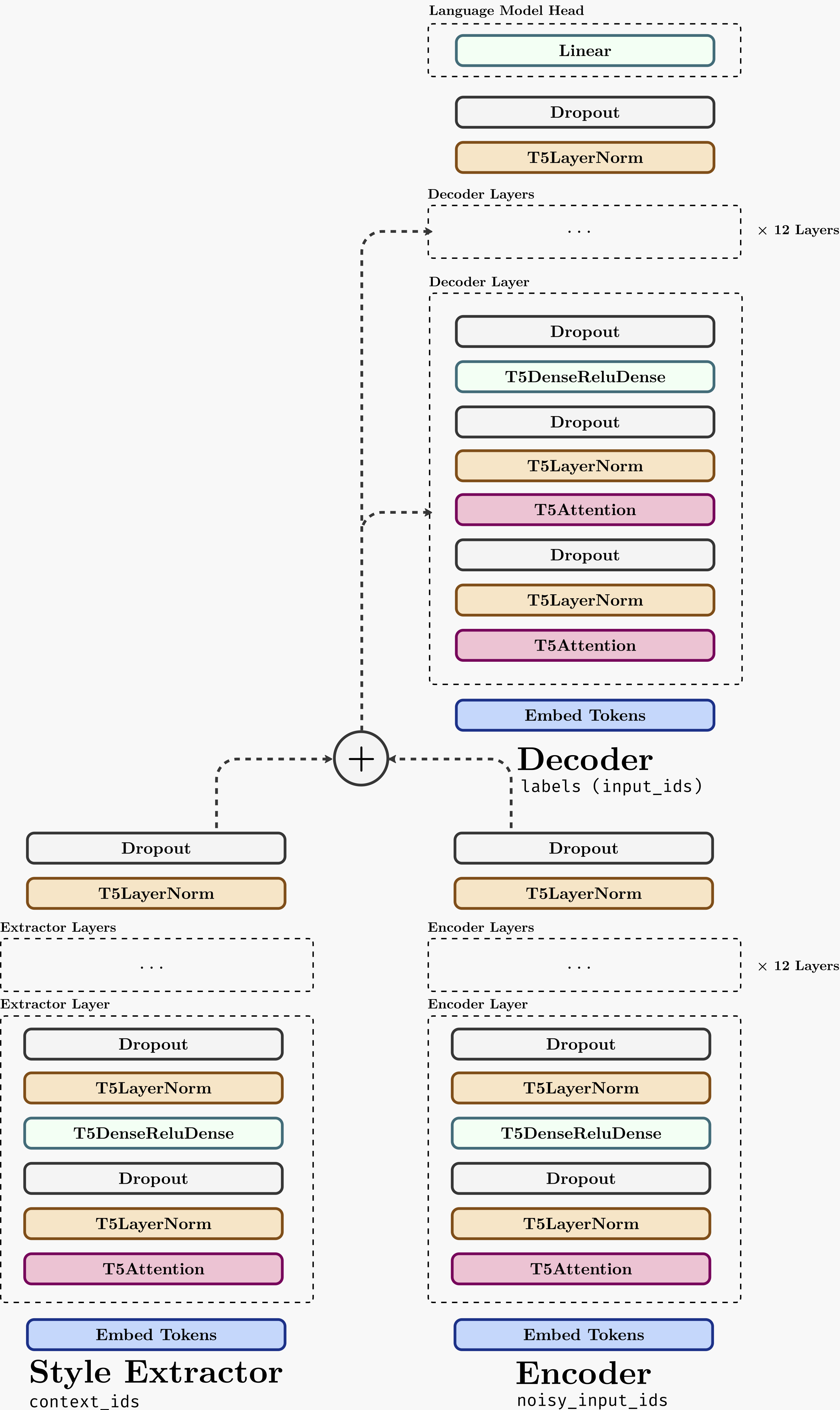

As the source code of TextSETTR has not been openly released, I implemented a T5ForConditionalGenerationWithExtractor model by extending the vanilla T5 model from the huggingface/transformers repository. While following the TextSETTR model architecture, I made several changes (e.g., to the noise generation function, extractor specifications, and hyperparameters) in order to reproduce the paper’s results with limited computational resources. The result is a working, and to my knowledge likely the first, third-party implementation of a T5-based text style extraction model. The model is defined as follows.

In my implementation, the encoder and decoder are identical to those in the T5-base model, and two additional modules are attached to the encoder-decoder network: a style extractor and a language model head. The style extractor is a deep copy of the encoder module, so its weights and biases are not tied to the encoder's. Before training, the extractor is initialized with weights identical to those of the encoder. The language model head is a single-layer feed-forward network that projects the decoder hidden states to the vocabulary size. The last hidden states of the encoder and the style extractor are combined via addition and fed into the decoder’s cross-attention layers. Under the assumption that adjacent sentences in the same context share the same style, the style extractor is expected to learn to extract the style of the context sentence, as facilitated by a noisy reconstruction task. More details about the training and inference processes are given in later sections.
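To make the "combined via addition" step concrete, here is a minimal, framework-free sketch. It assumes that the extractor output is mean-pooled into a single style vector before being added to every encoder position, as described in the TextSETTR paper; the text above does not state whether this implementation pools, so treat that as an assumption. The function names `mean_pool` and `combine` are hypothetical, and plain Python lists stand in for tensors.

```python
def mean_pool(hidden_states):
    """Average token-level states (seq_len x d_model) into one d_model vector."""
    n = len(hidden_states)
    return [sum(column) / n for column in zip(*hidden_states)]


def combine(encoder_states, extractor_states):
    """Add the pooled style vector to every encoder position.

    Assumption: pooling happens before the addition, so the context
    sentence and the input sentence may have different lengths.
    """
    style = mean_pool(extractor_states)
    return [[h + s for h, s in zip(state, style)] for state in encoder_states]


# Toy example: 3 input tokens, 2 context tokens, d_model = 2.
encoder_out = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
extractor_out = [[0.0, 2.0], [2.0, 4.0]]
decoder_memory = combine(encoder_out, extractor_out)
```

The resulting `decoder_memory` has the same shape as the encoder output, which is why the decoder can attend to it without any architectural change.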

Data Preparation

The T5 model with the style extractor is trained on a subset of the Amazon Review Data (2018) dataset. As my experimental setup could not handle the entire dataset, I sampled one million lines from the 5-core sub-dataset. During preprocessing, I kept only reviews with ≥ 2 sentences, where each sentence has 30 or more characters. I implemented a PyTorch Dataset class to load and preprocess the data. For every pair of adjacent sentences, the first sentence is used as the context and the second sentence as the input. Finally, the data preprocessor tokenizes the sentences using the original T5 tokenizer, splits the data into training, validation, and testing sets, and packages all the data loaders into a PyTorch Lightning DataModule for batching and training.
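The filtering and pairing rules above can be sketched in a few lines of standard-library Python. This is an illustration only, not the project's Dataset class: the regex-based sentence splitter, the choice to discard a whole review when any sentence is too short, and the names `split_sentences` and `make_pairs` are all assumptions.

```python
import re

MIN_SENTENCES = 2  # from the text: keep reviews with at least two sentences
MIN_CHARS = 30     # from the text: each sentence needs 30 or more characters


def split_sentences(review):
    """Naive splitter (an assumption): break on whitespace after ., ! or ?."""
    parts = re.split(r"(?<=[.!?])\s+", review.strip())
    return [p for p in parts if p]


def make_pairs(review):
    """Return (context, input) pairs of adjacent sentences, or [] if filtered out."""
    sentences = split_sentences(review)
    if len(sentences) < MIN_SENTENCES:
        return []
    if any(len(s) < MIN_CHARS for s in sentences):
        return []
    # The first sentence of each adjacent pair is the context, the second the input.
    return list(zip(sentences, sentences[1:]))


review = (
    "This blender is much louder than I expected it to be. "
    "It still crushes ice without any trouble at all."
)
pairs = make_pairs(review)
```

Each resulting pair would then be tokenized and split into training, validation, and test sets as described above.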

Training

The training of T5ForConditionalGenerationWithExtractor is unsupervised and facilitated by a reconstruction task. I implemented two helper functions to add noise to the input sentence: one adds noisy tokens (40%) and the other randomly drops tokens (20%). Like the original paper, I also employed the Noisy Back Translation (NBT) technique, in the same amount as the mechanical noise, to improve transfer accuracy. The diagram above illustrates the data flow of the training process. The tokens of the preceding sentence are used as the context, and the tokens of the following sentence, with Drop/Add + NBT noise applied, are used as the input IDs. Since the training goal is to reconstruct the original text from the noisy input based on the context style, the ground-truth sentences are fed directly into the decoder, and a cross-entropy loss is computed on top of the linear language model head. For hyperparameters, I used the AdamW optimizer with a learning rate of 1e-3 and PyTorch Lightning’s automatic scheduler. The batch size is set to 64, and the maximum number of tokens is set to 32. Since I had access to only one P100 GPU on Google Colab and the model has over 332 million trainable parameters, I trained it for only 2 epochs. Hopefully, this is sufficient for a proof of concept.
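The two mechanical noise functions might look roughly like the sketch below. How exactly the 20% and 40% figures are applied is not specified above, so this sketch assumes that 20% is a per-token drop probability and that 40% is the number of inserted tokens relative to the input length. The `noise_pool` and `rng` parameters are hypothetical, and Noisy Back Translation is not covered here.

```python
import random


def drop_tokens(tokens, rate=0.2, rng=random):
    """Drop each token independently with probability `rate`.

    Keeps at least one token for non-empty input so the example stays usable.
    """
    if not tokens:
        return []
    kept = [t for t in tokens if rng.random() >= rate]
    return kept if kept else [rng.choice(tokens)]


def add_tokens(tokens, noise_pool, rate=0.4, rng=random):
    """Insert round(rate * len(tokens)) tokens from `noise_pool` at random positions."""
    noisy = list(tokens)
    for _ in range(round(rate * len(tokens))):
        position = rng.randint(0, len(noisy))  # inclusive bounds, so appending is possible
        noisy.insert(position, rng.choice(noise_pool))
    return noisy


# Token IDs are plain integers here; a seeded generator keeps the run repeatable.
rng = random.Random(0)
token_ids = list(range(10))
with_added = add_tokens(token_ids, noise_pool=[100, 101], rate=0.4, rng=rng)
with_dropped = drop_tokens(token_ids, rate=0.2, rng=rng)
```

Both functions preserve the relative order of the surviving original tokens, which keeps the reconstruction target well defined.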

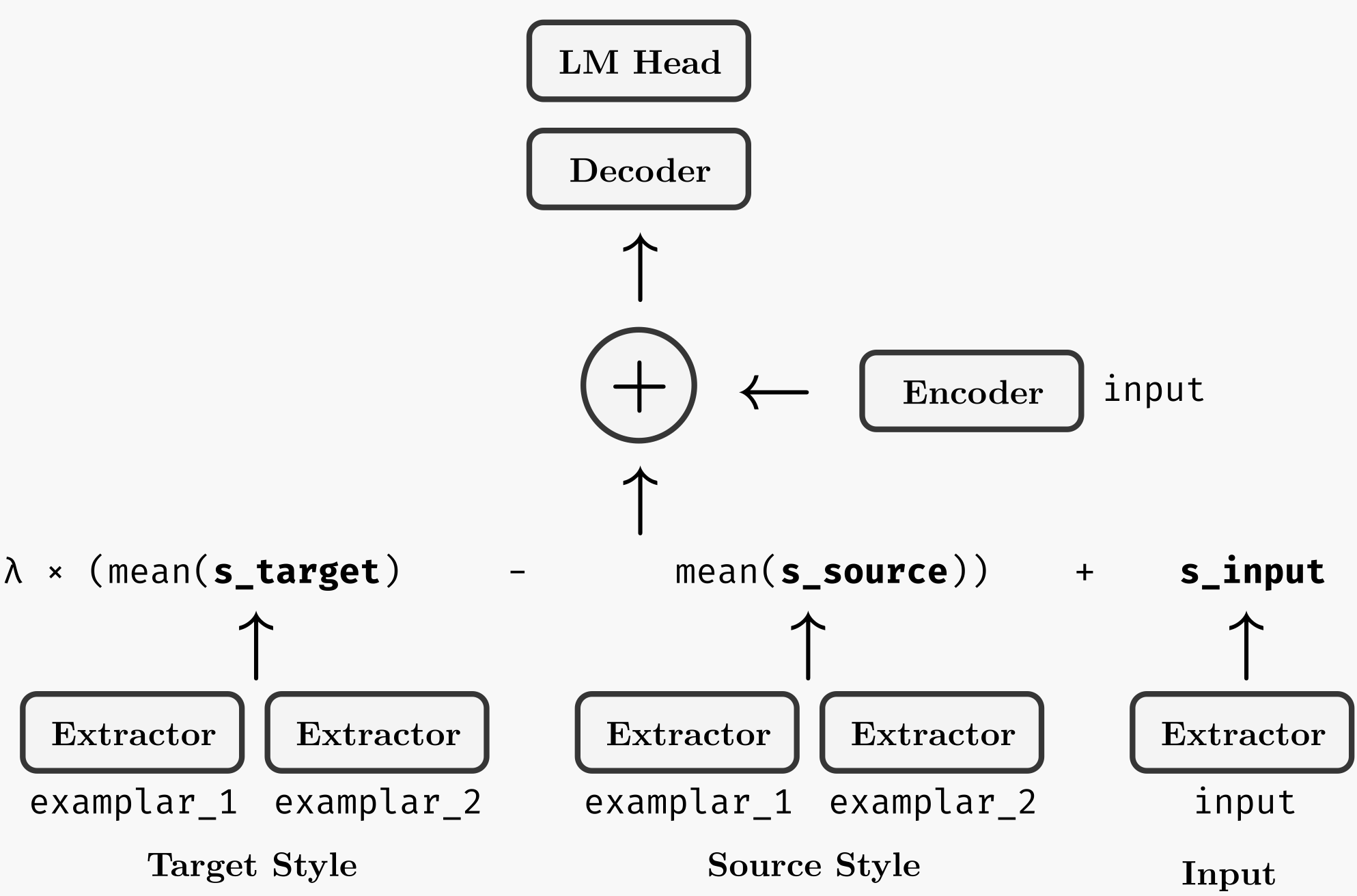

In inference mode, the strategy illustrated in the figure above is used to transfer text styles on a few-shot basis. First, we choose a target style and a source style similar to the input style. We feed the extractor with exemplars for both styles and obtain the corresponding style embeddings. By subtracting the source style embedding from the target style embedding, we get a style direction vector pointing from the source style towards the target style. Adding this direction to the style extracted from the input yields the transferred style vector. This vector is then summed with the encoder’s final hidden states for the input to obtain the final embedding, which is passed to the decoder. After greedy decoding over the language model output, we get the predicted output sentence.
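The style arithmetic at inference time can be sketched as follows. The direction is computed as target minus source so that it points toward the target style. Averaging the exemplar embeddings per style and the `scale` factor (a tunable transfer strength) are assumptions borrowed from the general TextSETTR recipe rather than details stated above, and the function names are hypothetical. Plain lists stand in for embedding vectors.

```python
def mean_vector(vectors):
    """Average a non-empty list of equal-length vectors (one per exemplar)."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def transfer_style(input_style, source_styles, target_styles, scale=1.0):
    """Shift the input style along the source -> target direction."""
    source = mean_vector(source_styles)
    target = mean_vector(target_styles)
    direction = [t - s for t, s in zip(target, source)]  # points toward the target
    return [x + scale * d for x, d in zip(input_style, direction)]


# Toy 2-dimensional embeddings for two source and two target exemplars.
source_embeddings = [[0.0, 0.0], [2.0, 0.0]]
target_embeddings = [[1.0, 2.0], [1.0, 4.0]]
new_style = transfer_style([5.0, 5.0], source_embeddings, target_embeddings)
```

With `scale=0` the input style is left unchanged, and larger values push the output further toward the target style.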

Results

| Source | Result |
|---|---|
| I hereby commit to never purchase anything from this institution in the future. | I’m hereby gonna never buy from this seller again. |
| I couldn’t figure out what the author was trying to say. | (Formal) I couldn’t figure out what exactly the author was trying to say. (Informal) I couldnt figure out what the author was trying to say. |

| Prompt | Emotional | Reserved |
|---|---|---|
| My favorite movie is \(\cdots\) | My favorate movie is awesome. My Favorate Movie is amazing! | My favorate movie is the movie which is great. |
| Please get \(\cdots\) | Please get it! | Please get it. Please do get this. |

| Prompt | Negative | Positive |
|---|---|---|
| The product is \(\cdots\) | The product is defective. | The product is good The Product is excellent |
| Apple watch is \(\cdots\) | Apple watch is useless. | Apple watch is amazing. |
| The University of California at San Diego is \(\cdots\) | The University of California at San Diego is a dead zone. | The University of California at San Diego is beautiful |

The tables above showcase some results of the model. For a qualitative evaluation, I tested the model on several tasks. The first group demonstrates the model’s performance on the formal ↔︎ informal transfer task, and all the outputs are generated by conditioning on only 3 exemplars for each style. The first result is quite remarkable, and the second result illustrates the flexibility of transfer directions (the target style need not differ from the input style). In the informal direction, notice that the model spells “couldn’t” as “couldnt,” a hallmark of informal language.

The second group of examples shows that, besides seq2seq transfer, this model is capable of conditional generation. Provided with a prompt and conditioned on only 3 examples in each style category, the model generates texts that differ in their level of emotion.

Another important task of text style transfer is sentiment transfer. The model appears to perform poorly on the seq2seq sentiment transfer task, where it almost always changes only a minimal number of words. In a conditional generation setting, by contrast, the outputs are both accurate and consistent.[^1]

From the examples above, one can also observe that in some instances the generation accuracy is questionable and fluency is not guaranteed. Notably, the model is prone to repetition. This is likely due to the small amount of training data (I used less than 1/100 of the original dataset) and the insufficient number of training steps. Unlike the original paper, I used the T5-base model instead of T5-large due to limited CUDA memory, which could further limit the capacity of the language model.

Conclusion

In this project, I implemented a transformer-based text style transfer model. I tested multiple approaches and ultimately decided to implement a few-shot T5 style extractor model. Starting from the vanilla T5 model provided by Hugging Face, I modified its lower-level architecture and trained the model on preprocessed data in an unsupervised manner. The results show that the model is capable of transferring and generating texts with different styles, as illustrated qualitatively on several tasks. Although the results are not as impressive as those of the state-of-the-art model, the model is remarkable in that it is trained on non-parallel data and can generalize to various tasks even when provided with only a few exemplars. This underscores the power of large, transformer-based pre-trained language models: with relatively minor modifications to the architecture and a limited number of additional training steps, they can be adapted to diverse tasks.

Style Exemplars Used

formal_exemplars = [

"This was a remarkably thought-provoking read.",

"It is certainly amongst my favorites.",

"We humbly request your presence at our gala on the 12th."

]

informal_exemplars = [

"reading this rly makes u think",

"Its def one of my favs",

"come swing by our bbq next week if ya can make it"

]

reserved_exemplars = [

"No thank you, I'd prefer not to.",

"This game could have been better designed.",

"Do you know why they might have delayed the launch?",

"Sorry, I wasn't certain if you were joking."

]

emotional_exemplars = [

"Hell no, you can't make me do that.",

"This game is such a piece of garbage!",

"Why in god's name would they delay the damn launch? Are you frigging kidding me?"

]

[^1]: For exemplars of styles used in the few-shot inference, see the appendix. Positive and negative exemplars were sampled (n=100) from the Yelp review polarity dataset.